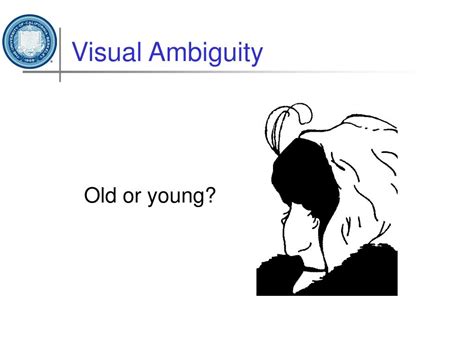

In data management and user interface design, identification codes need to be concise and hard to get wrong. The challenge grows when the codes must be both machine-readable and human-friendly. One common pitfall is the use of visually ambiguous characters: those pesky characters that look alike but represent different values, such as ‘O’ (the letter O) and ‘0’ (zero). They can cause significant confusion when codes are transcribed by hand or read aloud.
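As a rough illustration of avoiding look-alike characters, the sketch below builds a restricted alphabet and draws random codes from it. The set of excluded characters (`0`, `O`, `1`, `I`, `L`) and the names `LOOKALIKES`, `SAFE_ALPHABET`, and `generate_code` are assumptions made for this example, not an established standard.

```python
import secrets
import string

# Assumed set of look-alike characters; adjust for your typeface and audience.
LOOKALIKES = set("0O1IL")

# Digits and uppercase letters with the look-alikes removed (31 symbols).
SAFE_ALPHABET = "".join(
    ch for ch in string.digits + string.ascii_uppercase if ch not in LOOKALIKES
)


def generate_code(length: int = 8) -> str:
    """Return a random code drawn only from SAFE_ALPHABET."""
    return "".join(secrets.choice(SAFE_ALPHABET) for _ in range(length))


print(generate_code())
```

Which characters count as ambiguous depends on the font and on how the code is displayed, so the excluded set is worth reviewing for each use case.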

Visual ambiguity is compounded by phonetic similarity. For instance, the letters ‘B’ and ‘P’ can sound nearly identical when spoken aloud, particularly in noisy environments or over poor phone lines. Phonetic ambiguity matters most where codes are routinely communicated by voice, such as in customer service and tech support. A common recommendation is to adopt a standard spelling alphabet such as the NATO phonetic alphabet (Alpha, Bravo, Charlie, etc.) to reduce such confusion.
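One way to support agents who read codes over the phone is to show the spelled-out form next to the code. The sketch below is a minimal example; the function name `spell_out` is hypothetical, the letter words use common English spellings of the NATO alphabet, and digits are simply rendered as plain English number words, which is an assumption of this example.

```python
import string

# NATO phonetic alphabet words, in A-Z order (common English spellings).
LETTER_WORDS = (
    "Alpha Bravo Charlie Delta Echo Foxtrot Golf Hotel India Juliet Kilo "
    "Lima Mike November Oscar Papa Quebec Romeo Sierra Tango Uniform "
    "Victor Whiskey X-ray Yankee Zulu"
).split()

# Assumption: digits are read as ordinary English number words.
DIGIT_WORDS = "Zero One Two Three Four Five Six Seven Eight Nine".split()

SPELLING = dict(zip(string.ascii_uppercase, LETTER_WORDS))
SPELLING.update(zip(string.digits, DIGIT_WORDS))


def spell_out(code: str) -> str:
    """Spell a code word by word; unknown characters are passed through."""
    return " ".join(SPELLING.get(ch, ch) for ch in code.upper())


print(spell_out("bp7"))  # Bravo Papa Seven
```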

Beyond visual and phonetic ambiguity, randomly generated strings can accidentally spell real words or offensive combinations. For this reason, various coding systems exclude vowels and certain other letters. Without vowels, the likelihood of inadvertently forming a real or offensive word is greatly reduced. This method, although simple, plays an important role in designing codes that stay professional and respectful across cultures and languages.
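The vowel-exclusion idea can be sketched in a few lines. The names `VOWELS`, `VOWEL_FREE_ALPHABET`, and `generate_vowel_free_code` are hypothetical, and treating only A, E, I, O, U as vowels is an assumption; some systems may exclude further letters as well.

```python
import secrets
import string

# Assumption: only these five letters are treated as vowels.
VOWELS = set("AEIOU")

# Uppercase consonants plus digits (21 + 10 = 31 symbols).
VOWEL_FREE_ALPHABET = "".join(
    ch for ch in string.ascii_uppercase + string.digits if ch not in VOWELS
)


def generate_vowel_free_code(length: int = 8) -> str:
    """Return a random code that contains no vowels."""
    return "".join(secrets.choice(VOWEL_FREE_ALPHABET) for _ in range(length))


print(generate_vowel_free_code())
```

Removing vowels lowers the chance of readable words but does not eliminate every unwanted combination, since digits can stand in for letters in some readings.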

Furthermore, some advanced coding systems incorporate error-detection and error-correction mechanisms to safeguard against misreads or transcription errors. Adding a checksum or other redundancy to a code can drastically reduce the number of errors that slip through during input. A checksum lets the system detect a mistyped code, while schemes such as Reed-Solomon error correction show how a few extra symbols can go further and correct common entry mistakes, improving the reliability of the transmitted information.
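To make the checksum idea concrete, here is a minimal sketch of a single check character computed as a weighted sum modulo the alphabet size, similar in spirit to the ISBN-10 check digit. It only detects errors and is not Reed-Solomon, which can also correct them. The 31-symbol alphabet and the function names are assumptions for this example; because 31 is prime, the scheme catches any single wrong character and any swap of two adjacent characters for payloads of up to 29 characters.

```python
# Assumed alphabet: digits and uppercase letters without 0, 1, I, L, O.
ALPHABET = "23456789ABCDEFGHJKMNPQRSTUVWXYZ"
N = len(ALPHABET)  # 31, a prime, which is what makes the guarantees hold


def _weighted_sum(payload: str) -> int:
    # Payload position i gets weight i + 2; the check character has weight 1.
    return sum((i + 2) * ALPHABET.index(ch) for i, ch in enumerate(payload)) % N


def add_check_char(payload: str) -> str:
    """Append the character that makes the total weighted sum 0 modulo N."""
    return payload + ALPHABET[(-_weighted_sum(payload)) % N]


def is_valid(code: str) -> bool:
    """Return True if the code's final character matches its payload."""
    if len(code) < 2 or any(ch not in ALPHABET for ch in code):
        return False
    payload, check = code[:-1], code[-1]
    return (_weighted_sum(payload) + ALPHABET.index(check)) % N == 0


code = add_check_char("7KQ4MZ")
print(code, is_valid(code))
```

A form can call `is_valid` as the user types and reject a mistyped code before it ever reaches a database lookup.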

Today, the debate continues about best practices for creating these codes. While it’s tempting to use a large character set to get more compact codes, a more restrictive set that prioritizes clear differentiation and universal readability may be more beneficial in the long run. This approach aids accessibility, helping people with different languages and sensory abilities handle the information correctly, and it also beefs up security by reducing errors that could potentially lead to breaches. Whatever character set is chosen, the design has to balance inclusivity, comprehensibility, and security to meet the diverse needs of global users.
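The cost of a smaller character set can be estimated with simple arithmetic: a code of length n over k symbols can represent k^n distinct values. The sketch below computes the shortest length that covers a given number of IDs; the function name `chars_needed` and the figure of one billion IDs are assumptions chosen for illustration.

```python
def chars_needed(num_ids: int, alphabet_size: int) -> int:
    """Smallest code length whose capacity covers num_ids distinct values."""
    length, capacity = 0, 1
    while capacity < num_ids:
        capacity *= alphabet_size
        length += 1
    return length


ONE_BILLION = 10**9
print(chars_needed(ONE_BILLION, 62))  # 6 (digits plus upper- and lowercase)
print(chars_needed(ONE_BILLION, 31))  # 7 (a restricted 31-symbol set)
print(chars_needed(ONE_BILLION, 10))  # 9 (digits only)
```

Under these assumptions, halving the alphabet from 62 to 31 symbols costs only one extra character per code, which is often a reasonable price for readability.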
