RSS feeds remain an invaluable tool for readers who want a convenient way to follow their favorite blogs and news sites. However, as the user comments on Rachel by the Bay's recent post make clear, managing the behavior of feed readers can be a real challenge for server administrators. Some readers send conditional requests every 5 to 10 minutes, and others ignore HTTP headers such as `Retry-After` or `Cache-Control`; this errant polling can overload small servers and waste bandwidth and CPU. Keeping the system efficient calls for a balanced division of responsibilities between clients and servers.

One of the key points in the discussion concerns the `Retry-After` HTTP header, which tells a client how long to wait before making its next request. Commenter komadori noted that a `429 Too Many Requests` response accompanied by `Retry-After` gives clients a clear signal for regulating their own polling frequency. Still, as veeti pointed out, the mechanism has limits: if a reader happens to fetch the feed just before new content is published and is then told to back off, it can miss the update for an extended period. A smarter strategy in feed readers, such as adjusting the polling interval dynamically based on the feed's historical publishing pattern, could mitigate this problem while also reducing server load.
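As a rough illustration of the reader-side behavior described above, the Python sketch below combines two ideas: honoring `Retry-After` (which, per the HTTP specification, is either a number of seconds or an HTTP-date) and deriving a polling interval from the median gap between past posts. All names and constants here (`parse_retry_after`, `adaptive_interval`, `next_poll_delay`, the four-polls-per-gap ratio, the 15-minute floor and 24-hour ceiling) are hypothetical choices for illustration, not taken from any real feed reader.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from statistics import median
from typing import List, Optional

# Hypothetical policy knobs, not taken from any particular feed reader.
MIN_INTERVAL_S = 15 * 60        # never poll more often than every 15 minutes
MAX_INTERVAL_S = 24 * 60 * 60   # always poll at least once a day
DEFAULT_INTERVAL_S = 60 * 60    # used until there is enough publishing history
POLLS_PER_GAP = 4               # poll about 4 times per typical gap between posts


def parse_retry_after(value: str, now: datetime) -> Optional[float]:
    """Seconds to wait according to a Retry-After value, or None if unusable.

    Retry-After is either delta-seconds ("120") or an HTTP-date.
    """
    value = value.strip()
    if value.isascii() and value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # older Pythons raise TypeError on junk
        return None
    if when.tzinfo is None:           # "-0000" dates come back naive; treat as UTC
        when = when.replace(tzinfo=timezone.utc)
    return max(0.0, (when - now).total_seconds())


def adaptive_interval(publish_times: List[datetime]) -> float:
    """Derive a polling interval from the median gap between past posts."""
    if len(publish_times) < 2:
        return float(DEFAULT_INTERVAL_S)
    ordered = sorted(publish_times)
    gaps = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    interval = median(gaps) / POLLS_PER_GAP
    return float(min(MAX_INTERVAL_S, max(MIN_INTERVAL_S, interval)))


def next_poll_delay(publish_times: List[datetime],
                    retry_after: Optional[str],
                    now: datetime) -> float:
    """Never poll sooner than the server asked; otherwise follow the history."""
    delay = adaptive_interval(publish_times)
    if retry_after is not None:
        requested = parse_retry_after(retry_after, now)
        if requested is not None:
            delay = max(delay, requested)
    return delay
```

Taking the larger of the two delays means the reader never polls sooner than the server requested, while a feed that posts once a day would be checked roughly every six hours instead of every few minutes.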

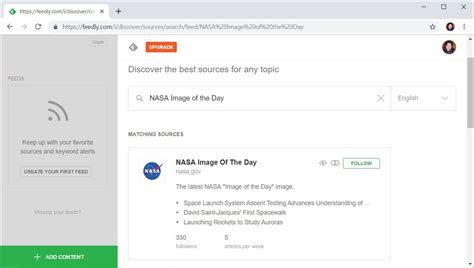

A promising solution that emerged from the comments is WebSub (formerly PubSubHubbub), a protocol for real-time notification of feed updates. As supriyo-biswas mentioned, WebSub can substantially reduce the load on servers because new content is pushed only when it is published. The publisher advertises a hub in its feed and notifies that hub whenever it posts; the hub then delivers the new content to each subscriber's callback URL. Subscribers therefore no longer need to poll the feed on a fixed schedule, which sharply cuts the number of fetch requests. For publishers aiming for efficient resource usage, WebSub is worth prioritizing, since it builds on ordinary HTTP rather than relying on repeated, high-frequency checks from clients.
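On the subscriber side, WebSub comes down to two small HTTP handlers. The Python sketch below is a minimal illustration rather than a complete implementation: it (1) answers the hub's verification-of-intent GET request by echoing `hub.challenge` only for subscriptions the subscriber actually requested, and (2) checks the `X-Hub-Signature` header (`method=signature`, an HMAC of the request body keyed with the `hub.secret` supplied when subscribing) on content the hub pushes. The function names and the in-memory `pending` set are hypothetical; a real subscriber would wire these into a web framework and persistent storage.

```python
import hashlib
import hmac
from typing import Mapping, Optional, Set, Tuple

ALLOWED_MODES = ("subscribe", "unsubscribe")
ALLOWED_HASHES = ("sha1", "sha256", "sha384", "sha512")


def handle_verification(params: Mapping[str, str],
                        pending: Set[Tuple[str, str]]) -> Tuple[int, str]:
    """Answer the hub's verification GET request.

    `pending` holds (mode, topic) pairs this subscriber actually requested,
    so nobody can confirm a subscription we never asked for.
    """
    mode = params.get("hub.mode")
    topic = params.get("hub.topic")
    challenge = params.get("hub.challenge")
    if mode in ALLOWED_MODES and challenge and (mode, topic) in pending:
        return 200, challenge  # echo the challenge verbatim to confirm
    return 404, ""             # refuse anything we did not request


def verify_signature(body: bytes, header: Optional[str], secret: bytes) -> bool:
    """Check an X-Hub-Signature header of the form 'method=hexdigest'."""
    if not header:
        return False
    method, sep, received = header.partition("=")
    if not sep or method not in ALLOWED_HASHES:
        return False
    expected = hmac.new(secret, body, method).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), received.encode())
```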

Commenter boricj highlighted another practical problem: poorly behaved readers that waste bandwidth and CPU because they never send an `Accept-Encoding: gzip` request header or because they bypass caching altogether. A reader that omits `Accept-Encoding` receives the full, uncompressed feed on every fetch, inflating the amount of data transferred. This inefficiency is especially painful for bloggers running their sites on limited infrastructure, such as a home NAS. To counter it, boricj suggests serving feeds with an explicit `Cache-Control` header. A value such as `Cache-Control: public, max-age=86400, stale-if-error=86400` tells clients (and shared caches) that they may reuse their copy for 24 hours without re-fetching, and may keep serving that stale copy for up to another 24 hours if the server returns an error. Clients that honor these directives place far less strain on the server; the trade-off is that a new post may take up to a day to reach them, so `max-age` should reflect how often the feed actually changes.
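A minimal sketch of the server-side fix, assuming a hand-rolled handler rather than any particular web server: compress the feed only when the client's `Accept-Encoding` lists gzip with a non-zero q-value, and attach the `Cache-Control` value quoted above. The helper names are hypothetical. The `Vary: Accept-Encoding` header matters because the response is marked `public`; it tells shared caches to keep compressed and uncompressed copies apart.

```python
import gzip
from typing import Dict, Tuple

# The header value quoted in the discussion; tune max-age to how often you post.
CACHE_CONTROL = "public, max-age=86400, stale-if-error=86400"


def accepts_gzip(accept_encoding: str) -> bool:
    """True if Accept-Encoding lists gzip (or x-gzip) with a non-zero q-value.

    For simplicity this ignores the '*' wildcard, a conservative choice:
    such clients simply receive the uncompressed feed.
    """
    for item in accept_encoding.split(","):
        coding, *params = [part.strip() for part in item.split(";")]
        if coding.lower() not in ("gzip", "x-gzip"):
            continue
        q = 1.0
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        return q > 0
    return False


def build_feed_response(feed_xml: bytes,
                        accept_encoding: str) -> Tuple[Dict[str, str], bytes]:
    """Return (headers, body) for a feed request, compressing only when allowed."""
    headers = {
        # Widely used (though not IANA-registered) media type for RSS.
        "Content-Type": "application/rss+xml; charset=utf-8",
        "Cache-Control": CACHE_CONTROL,
        # The response is 'public', so shared caches must key on the encoding.
        "Vary": "Accept-Encoding",
    }
    body = feed_xml
    if accepts_gzip(accept_encoding):
        body = gzip.compress(feed_xml)
        headers["Content-Encoding"] = "gzip"
    headers["Content-Length"] = str(len(body))
    return headers, body
```

Because feed XML is highly repetitive, gzip typically shrinks it substantially, so a reader that sends `Accept-Encoding: gzip` costs the server far less bandwidth per fetch.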

At its core, the debate is about the responsibility shared between server administrators and client developers. As noncoml remarked, controlling the polling interval matters regardless of how often, or how irregularly, a site posts. Clients should respect the limits servers set, or risk being rate-limited or blocked outright. Server administrators, for their part, should make sure their feeds support standard HTTP caching and conditional-request headers. For instance, a server that emits `ETag` or `Last-Modified` headers can compare them against a client's `If-None-Match` or `If-Modified-Since` request headers and, when the feed has not changed, answer with a bodiless `304 Not Modified` response, minimizing transfer sizes and conserving resources.
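The conditional-request logic can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not a drop-in implementation: request headers arrive as a plain dict with canonical capitalization, the ETag is a hypothetical quoted SHA-256 digest of the feed body, and `If-None-Match` values are split on commas, which is safe only for ETags that contain no commas (true of hex digests). Following HTTP semantics, `If-None-Match` takes precedence over `If-Modified-Since` when both are present.

```python
import hashlib
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Hypothetical strong ETag: a quoted SHA-256 hex digest of the feed body."""
    return '"' + hashlib.sha256(body).hexdigest() + '"'


def _opaque(tag: str) -> str:
    """Drop a weak-validator prefix so tags compare weakly, as If-None-Match requires."""
    tag = tag.strip()
    return tag[2:] if tag.startswith("W/") else tag


def is_not_modified(etag: str, last_modified: datetime,
                    if_none_match: Optional[str],
                    if_modified_since: Optional[str]) -> bool:
    if if_none_match is not None:
        # If-None-Match wins; If-Modified-Since is ignored when it is present.
        if if_none_match.strip() == "*":
            return True
        return _opaque(etag) in {_opaque(t) for t in if_none_match.split(",")}
    if if_modified_since is not None:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            return False  # unparseable date: ignore the condition
        if since.tzinfo is None:
            since = since.replace(tzinfo=timezone.utc)
        # HTTP dates have one-second resolution, so compare without microseconds.
        return last_modified.replace(microsecond=0) <= since
    return False


def feed_response(feed_xml: bytes, last_modified: datetime,
                  request_headers: Dict[str, str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body); last_modified must be timezone-aware UTC."""
    etag = make_etag(feed_xml)
    headers = {
        "ETag": etag,
        "Last-Modified": format_datetime(last_modified, usegmt=True),
    }
    if is_not_modified(etag, last_modified,
                       request_headers.get("If-None-Match"),
                       request_headers.get("If-Modified-Since")):
        return 304, headers, b""  # validators repeated, body omitted
    return 200, headers, feed_xml
```

A reader that stores the `ETag` and `Last-Modified` values from its last 200 response and sends them back on the next poll pays only for a few hundred bytes of headers whenever the feed is unchanged.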

Ultimately, RSS technology is far from outdated; it is held back primarily by the lack of adherence to best practices and the absence of more intelligent, adaptive behavior from both sides. Although feed technologies might seem niche or reserved for a specific subset of power users, they remain a critical tool for information aggregation and consumption. Therefore, ensuring that systems and protocols around RSS feeds are optimized for efficiency and reliability is essential. This balance can be achieved by adopting standards like WebSub, employing proper caching headers, and educating both feed reader developers and content creators about the implications of their implementation choices. The outcome would be a healthier internet ecosystem where dynamic content is delivered seamlessly without putting undue stress on the underlying infrastructure.
