Apple has introduced its new foundation models, which combine on-device and server-based capabilities to enhance the user experience. This dual approach marks a notable step in AI technology, allowing more sophisticated features to run seamlessly on Apple devices. Users can now rely on a 3-billion-parameter model running directly on their devices for everyday tasks, while more complex requests are handled by larger models on Apple’s servers. However, this innovation also raises several critical questions, particularly about how data is collected and used.

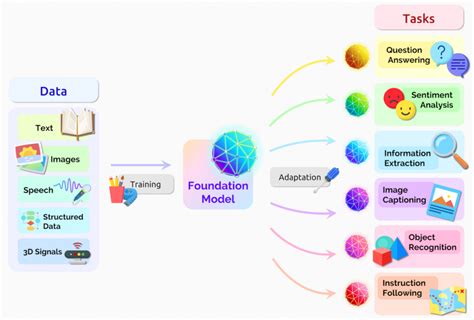

The mechanics behind Apple’s on-device and server-based models are worth a closer look. The on-device model runs without relying on cloud resources, using the device’s own hardware for efficiency and privacy. For instance, by attaching various ‘adapters’ to the foundation model, Apple can dynamically specialize it for specific tasks without overwhelming the device’s memory. This keeps the operating system responsive and reduces energy consumption, which is essential for on-the-go usage. Yet it remains unclear when the system will switch between the on-device and server models, especially since real-world scenarios vary significantly.
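To make the adapter idea concrete, here is a minimal sketch in plain Python. It assumes a low-rank adapter design, in which a small pair of matrices is added on top of a frozen base weight matrix; the article does not specify how Apple's adapters are built, so the class name `AdaptedLayer`, the helper `adapter_param_count`, and all sizes below are hypothetical illustrations rather than Apple's implementation.

```python
# Hypothetical sketch of a low-rank adapter on a frozen base layer.
# Names and sizes are illustrative assumptions, not Apple's actual design.

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class AdaptedLayer:
    """A frozen base weight matrix plus swappable per-task adapters."""

    def __init__(self, base_weight):
        self.base_weight = base_weight  # shared by every task, never modified
        self.adapters = {}              # task name -> (down, up) matrices
        self.active = None              # currently selected task, if any

    def register(self, task, down, up):
        # down has shape (rank, d_in); up has shape (d_out, rank)
        self.adapters[task] = (down, up)

    def activate(self, task):
        if task is not None and task not in self.adapters:
            raise KeyError(f"no adapter registered for task {task!r}")
        self.active = task

    def forward(self, x):
        y = matvec(self.base_weight, x)
        if self.active is None:
            return y
        down, up = self.adapters[self.active]
        delta = matvec(up, matvec(down, x))  # low-rank correction for this task
        return [a + b for a, b in zip(y, delta)]


def adapter_param_count(d_out, d_in, rank):
    """Parameters in one low-rank adapter for a (d_out x d_in) layer."""
    return rank * (d_in + d_out)


layer = AdaptedLayer(base_weight=[[1, 0], [0, 1]])
layer.register("summarize", down=[[1, 1]], up=[[0.5], [0.0]])

layer.activate("summarize")
print(layer.forward([2, 3]))  # [4.5, 3.0]

layer.activate(None)          # back to the unmodified base model
print(layer.forward([2, 3]))  # [2, 3]

# A rank-16 adapter for a hypothetical 2048 x 2048 layer is 64x smaller
# than the layer itself, which is why several adapters can fit in memory.
print(adapter_param_count(2048, 2048, 16), 2048 * 2048)  # 65536 4194304
```

Under these assumptions, switching tasks means selecting a different small pair of matrices while the large base weights stay loaded once, which is the memory saving the paragraph above describes.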

Beyond the technology, the conversation around Apple’s foundation models quickly shifts to ethical concerns about data collection and usage. A major point of contention is Apple’s reliance on vast amounts of publicly available data to train these models. Critics argue that much of this data was collected without informed consent, akin to ‘pirating’ content for corporate gain. Apple asserts that web publishers can opt out of data usage by blocking its Applebot crawler, but the initial sprawling data collection likely occurred before many publishers knew how to opt out. This has led to a debate on the ethical implications of training AI on large datasets scraped from the internet.
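For publishers wondering what such an opt-out looks like in practice, crawler blocking is conventionally done in a site's `robots.txt` file. The sketch below uses Python's standard `urllib.robotparser` to check whether a given `robots.txt` blocks a crawler. The user-agent token `Applebot` follows the crawler named above, while the sample rules, the `example.com` URL, and the helper name `crawler_allowed` are illustrative assumptions. Check Apple's own documentation for the exact token that governs training use.

```python
from urllib.robotparser import RobotFileParser


def crawler_allowed(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Illustrative robots.txt: block Applebot everywhere, allow everyone else.
ROBOTS_TXT = """\
User-agent: Applebot
Disallow: /

User-agent: *
Allow: /
"""

page = "https://example.com/blog/my-post"  # placeholder URL
print(crawler_allowed(ROBOTS_TXT, "Applebot", page))  # False
print(crawler_allowed(ROBOTS_TXT, "OtherBot", page))  # True
```

Note that `robots.txt` only governs future crawls by crawlers that honor it; it does nothing about content collected before the rule was added, which is the timing concern raised above.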

The issue of copyright and ownership further complicates the narrative. While some commentators suggest that scraping publicly available data is akin to reading comments on a forum, others feel it takes from creators while giving nothing back. This touches on broader concerns about the sustainability of creative industries in the face of AI-generated content. As models evolve to mimic and reproduce human-like artistic output, the protection offered by copyright may no longer be enough to encourage new artistic endeavors. Indeed, artists’ livelihoods and the wider creative ecosystem may be at risk as AI-generated media becomes more prevalent and accepted.

Moreover, Apple’s commitment to privacy and security is both lauded and scrutinized. The company emphasizes that its models are fine-tuned not only for performance but also to minimize harmful outputs, backed by rigorous human evaluation. Yet skeptics argue that such claims require stringent, transparent verification. Though Apple has opened up about some of its privacy practices, the real test lies in independent audits and in how robust these mechanisms prove over time. One example cited is Apple’s use of adapters (small neural network modules) to specialize the model for different tasks on the fly. It is an impressive piece of engineering, but the accompanying privacy assurances will need to withstand practical scrutiny.

In summary, Apple’s foundation models represent a significant technological advancement in AI, promising benefits in both performance and privacy. However, they also raise ethical and practical dilemmas that call for broad discussion among technologists, ethicists, and legal experts alike. As Apple navigates this terrain, only time will tell how widely these models are adopted and whether they address the nuanced challenges they bring with them. For developers and consumers, these models might open new doors, but the route to a fully ethical and balanced integration is fraught with complexities that must be addressed thoughtfully.
