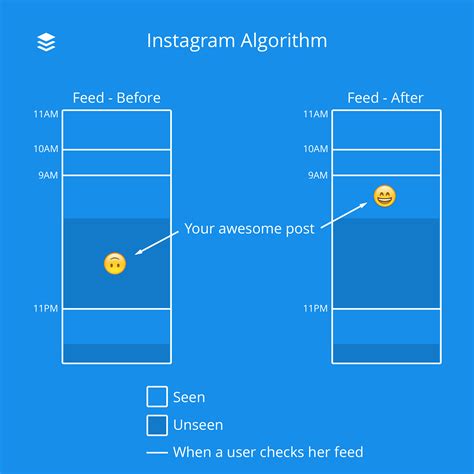

In an ironic twist that shines a glaring light on modern technological ethics, recent reports have shown that Instagram’s algorithm is recommending sexual content to accounts purportedly belonging to 13-year-olds. This revelation arrives amidst an increasingly complex landscape where social media platforms wield unprecedented power over our collective psyche. The question that arises isn’t just about the appropriateness of the content, but rather the deeper issue of how algorithms, driven by engagement metrics, can turn problematic almost under the radar.

The pressure on social media companies to keep users engaged has never been higher. In this context, the Wall Street Journal's reporting points to an unsettling truth: engagement, often measured in clicks and time spent on the platform, tends to lead algorithms down a morally ambiguous path. When this path intersects with young, impressionable minds, it becomes a matter of grave concern. Congress seems unable, or unwilling, to act effectively due to partisan gridlock and heavy lobbying by tech giants like Meta. This inertia turns what could be regulatory oversight into a Sisyphean endeavor.

One commenter astutely noted, ‘Maybe using opaque algorithms to score and assign prominence to specific content based on ill-defined metrics like "engagement" was a mistake.’ Algorithms don’t understand morality; their sole purpose is to maximize engagement, and sexual content regrettably tends to draw more engagement than safer alternatives. This digital Darwinism isn’t without consequences, especially when users are too young to discern the ethical implications of the content they consume.
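To make the point concrete, here is a minimal sketch of what engagement-only ranking looks like next to the same ranking with an age gate in front of it. Everything in it is an assumption made for illustration: the `Post` fields, the engagement scores, the `adult` label, and the age threshold are hypothetical and say nothing about how Instagram or any other platform actually ranks content.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical score between 0 and 1
    adult: bool                  # hypothetical content label


def rank_by_engagement(posts):
    """Engagement-only ranking: the score alone decides the order."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rank_for_user(posts, user_age, adult_age=18):
    """Same ranking, but adult-labelled posts are dropped first for minors."""
    if user_age < adult_age:
        posts = [p for p in posts if not p.adult]
    return rank_by_engagement(posts)


# Made-up sample data, purely for illustration.
posts = [
    Post("cooking tutorial", 0.41, adult=False),
    Post("suggestive reel", 0.87, adult=True),
    Post("skate clip", 0.63, adult=False),
]
```

With this toy data, `rank_by_engagement(posts)` puts the adult-labelled post first no matter who is watching, while `rank_for_user(posts, user_age=13)` never surfaces it. The ranking function has no notion of who the viewer is unless someone deliberately adds one.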

There’s a philosophical lens to consider here. When an algorithm correctly identifies content that retains user interest but is inherently problematic, one might be tempted to blame human nature itself. However, saying ‘the problem lies with the person, not the algorithm’ is akin to allowing gambling without any safeguards. We still keep children out of casinos because they lack the judgment necessary to make sound choices when exposed to adult stimuli.

The analogy between addictive substances and algorithm-driven content is also stark. If a drug dealer determines that certain illegal substances will keep users hooked, does the responsibility rest with the user alone? The collective ethical obligation to safeguard vulnerable demographics such as teenagers becomes evident. Another side of this argument is presented poignantly by those who say their lives were detrimentally affected by early and unfettered access to extreme content. What began as mere exploration can echo into adulthood as significant challenges.

Historically, exposure to sexual content has been clandestine but largely limited by physical barriers, be it late-night TV or backroom video stores. In today’s hyper-connected world, however, children carry a gateway to such content in their pockets: their phones, often with none of the previous barriers. The scale and degree of exposure are unparalleled, which makes the conversations around algorithm regulation urgent and necessary.

Interestingly, platforms like TikTok have managed to navigate these murky waters better than Instagram. According to various reports, TikTok’s algorithmic governance for young users is markedly more robust, curbing the display of adult materials even when such content is sought actively by minor accounts. This disparity in adherence to child protection norms is both striking and embarrassing for Meta, the parent company of Instagram, which has repeatedly assured the public of its commitment to user safety.

The problem with our present digital age isn’t just the presence of such content but its seemingly unstoppable promotion due to engagement algorithms. The legislative angle remains fraught with complications, as effective policy-making seems to lag woefully behind technological advancements. Yet, public sentiment is clear: social media companies must take more responsibility for the content they recommend, especially to vulnerable users like children.

The advent of Section 230, which shields tech companies from being liable for user-uploaded content, has arguably nurtured an environment ripe for abuse. Carve-outs and exceptions for egregious recommendations, like pushing sexual content to minors, could potentially ameliorate the situation. Users, on the other hand, wield tremendous power themselves. Abstaining from platforms that lack ethical rigor can serve as a potent message to these digital behemoths.

In conclusion, the debate is far from semantic nitpicking; it’s an urgent call for redefining technological ethics in a world driven by algorithms. As a society, we need to decide whose responsibility it is to navigate this tricky terrain – and how much say we are willing to give up in exchange for the monetary gains these social media platforms promise. Until action is taken, be it through user choices, legislative mandates, or corporate introspection, the troubling trend of problematic algorithmic recommendations is likely here to stay.
